Agent hallucinations, in which AI systems generate confident but incorrect outputs, are especially dangerous inside complex, multi-step workflows, where one fabricated result can propagate through every step that follows.

Rather than treating hallucinations as quirky AI behavior, enterprises must address them as a systems and workflow design problem. The solution lies in grounding, structure, validation, and thoughtful human oversight.

Why Hallucinations Increase as Workflows Get More Complex

Modern AI agents excel at pattern recognition and probabilistic reasoning. But complex workflows demand more than improvisation.

Agents must:

- Interpret ambiguous instructions

- Maintain context across multiple steps

- Combine inputs from disparate systems

- Make decisions under constraints

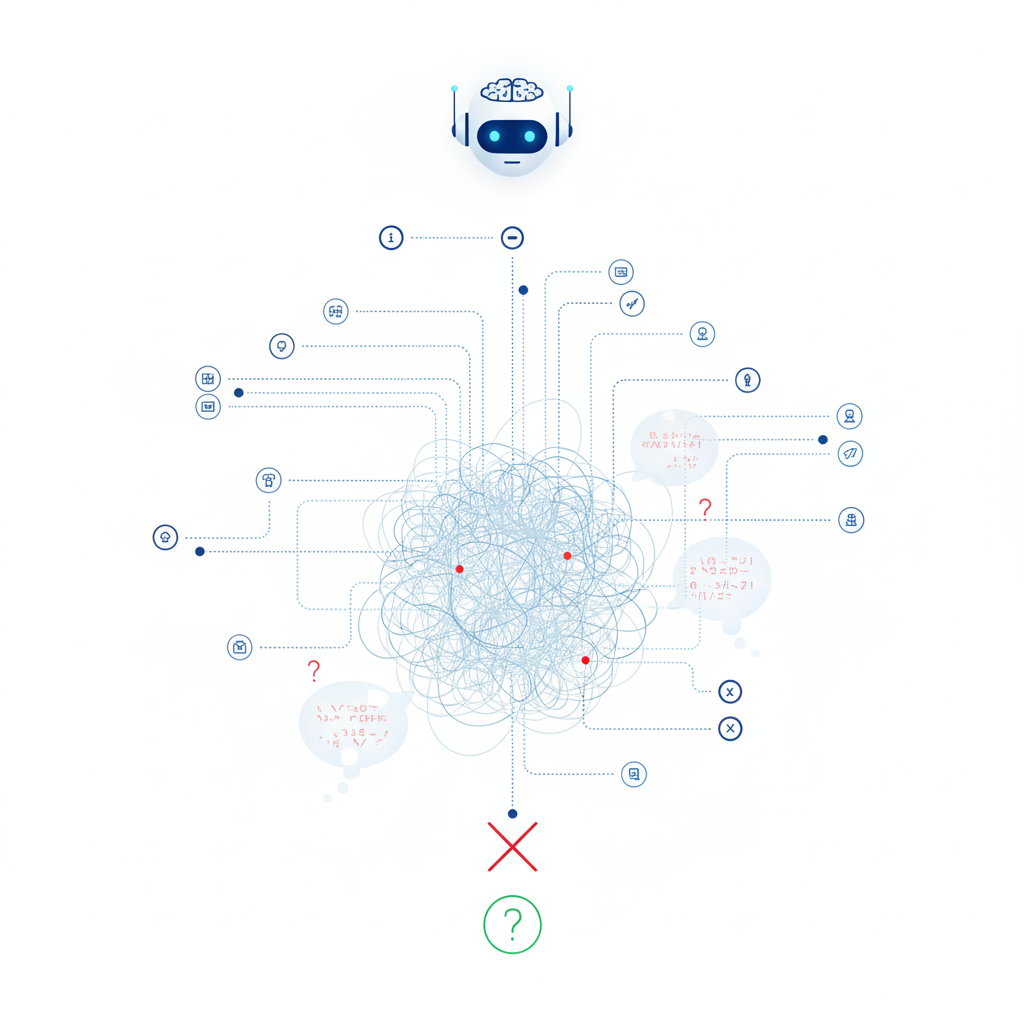

When context becomes dense or unclear, agents often fill gaps with fabricated or assumed information, hallucinating their way forward instead of asking for clarification. That may be tolerable in casual interactions, but it is unacceptable in operational, regulated, or high-impact workflows.

The Root Causes of Agent Hallucinations

1. Cognitive Overload in Monolithic Workflows

When agents are asked to manage end-to-end processes in a single pass, complexity overwhelms their reasoning ability. Too many objectives, rules, and dependencies increase the likelihood of incorrect assumptions.

2. Weak or Missing Ground Truth

Agents relying solely on internal model knowledge lack real-time verification. Without access to authoritative data sources, they confidently generate answers that sound right but aren't.

3. Ambiguous Context and Hidden Logic

Business rules embedded inside prompts, or workflow logic that is never documented, create blind spots. When the rules are implicit, agents guess instead of reasoning.

Practical Strategies to Curb Hallucinations

1. Modularize the Workflow

Break complex processes into smaller, well-defined steps. Each agent interaction should have:

- A single objective

- Clear inputs and outputs

- Limited scope for interpretation

This reduces cognitive load and limits the surface area for hallucination.
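A minimal Python sketch of this idea, assuming a hypothetical invoice-processing workflow: each step is a separate function with one objective and a typed input and output, so a bad result fails loudly at a known step instead of leaking into the next one. The dataclasses, the `vendor=...;amount=...` input format, and the purchase-order lookup are illustrative assumptions; in a real system `extract` might wrap a narrowly scoped model call.

```python
from dataclasses import dataclass


# Hypothetical step outputs for an illustrative invoice workflow.
# Each step has a single objective and returns a small, typed result.
@dataclass(frozen=True)
class ExtractedInvoice:
    vendor: str
    amount: float


@dataclass(frozen=True)
class MatchedInvoice:
    vendor: str
    amount: float
    po_number: str


def extract(raw_text: str) -> ExtractedInvoice:
    """Objective: pull vendor and amount out of the input, nothing more.

    Deterministic stand-in for what could be a narrowly scoped model call.
    """
    fields = dict(part.split("=", 1) for part in raw_text.split(";"))
    return ExtractedInvoice(vendor=fields["vendor"], amount=float(fields["amount"]))


def match_po(invoice: ExtractedInvoice, po_index: dict[str, str]) -> MatchedInvoice:
    """Objective: attach the purchase order; fail rather than invent one."""
    if invoice.vendor not in po_index:
        raise LookupError(f"no purchase order on file for {invoice.vendor!r}")
    return MatchedInvoice(invoice.vendor, invoice.amount, po_index[invoice.vendor])


# Usage: the steps compose explicitly, so each hand-off is inspectable.
po_index = {"Acme": "PO-1001"}  # hypothetical lookup table
result = match_po(extract("vendor=Acme;amount=250.00"), po_index)
print(result)  # MatchedInvoice(vendor='Acme', amount=250.0, po_number='PO-1001')
```

Because `match_po` raises instead of guessing when no purchase order exists, the gap surfaces at the step that owns it.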

2. Reinforce Context with External Knowledge

Ground agents in trusted systems:

- Databases

- Knowledge graphs

- APIs and system-of-record services

Agents should verify facts instead of inventing them.
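One way to sketch grounding, assuming a dictionary stands in for the system of record: the agent's answer is built only from a retrieved record, and any value the agent produces can be checked against that record before it is used. `ORDER_RECORDS`, `lookup_order`, `verify_claim`, and the sample orders are hypothetical; a real deployment would query a database, knowledge graph, or API instead.

```python
from typing import Optional

# Hypothetical stand-in for a system of record (database, API, or knowledge graph).
ORDER_RECORDS = {
    "A-100": {"status": "shipped", "carrier": "UPS"},
    "A-101": {"status": "processing", "carrier": None},
}


def lookup_order(order_id: str) -> Optional[dict]:
    """Fetch the authoritative record, or None if it does not exist."""
    return ORDER_RECORDS.get(order_id)


def verify_claim(order_id: str, field: str, claimed_value: object) -> bool:
    """Check a value the agent produced against the system of record."""
    record = lookup_order(order_id)
    return record is not None and record.get(field) == claimed_value


def grounded_answer(order_id: str) -> str:
    """Answer only from retrieved facts; never fill a gap with a guess."""
    record = lookup_order(order_id)
    if record is None:
        return f"No record found for order {order_id}; escalating instead of guessing."
    return f"Order {order_id} is {record['status']}."


print(grounded_answer("A-100"))                  # Order A-100 is shipped.
print(verify_claim("A-101", "status", "shipped"))  # False
```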

3. Add Validation Layers

Introduce checkpoints between steps:

- Automated rule validation

- Policy and constraint checks

- Human approval for high-risk actions

Validation prevents a single hallucinated step from cascading through the workflow.
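A minimal sketch of a checkpoint between steps, assuming each validator is a plain function that returns a list of problems. The payment fields, the supported currencies, and the 10,000 approval threshold are hypothetical; the point is that a failing check stops the workflow before the next step consumes the output.

```python
from typing import Callable

# A validator inspects a step's output and returns a list of problems;
# an empty list means the output passed.
Validator = Callable[[dict], list[str]]


class CheckpointError(Exception):
    """Raised when a step's output fails validation, halting the workflow."""


def checkpoint(step_name: str, output: dict, validators: list[Validator]) -> dict:
    """Run every validator and stop the workflow if any reports a problem."""
    problems = [msg for validate in validators for msg in validate(output)]
    if problems:
        raise CheckpointError(f"{step_name} failed validation: {'; '.join(problems)}")
    return output


# Hypothetical rules for a payment step.
def amount_is_positive(out: dict) -> list[str]:
    return [] if out.get("amount", 0) > 0 else ["amount must be positive"]


def currency_is_supported(out: dict) -> list[str]:
    return [] if out.get("currency") in {"USD", "EUR"} else ["unsupported currency"]


def large_amounts_need_approval(out: dict) -> list[str]:
    # High-risk actions require a named human approver before continuing.
    if out.get("amount", 0) > 10_000 and not out.get("approved_by"):
        return ["amounts over 10,000 require human approval"]
    return []


RULES = [amount_is_positive, currency_is_supported, large_amounts_need_approval]

# Usage: only outputs that pass every check are handed to the next step.
payment = checkpoint("prepare_payment", {"amount": 500, "currency": "USD"}, RULES)
```

Collecting all problems before raising, rather than stopping at the first, gives reviewers the full picture of why a step was blocked.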

4. Make Prompts Explicit—but Don't Rely on Them Alone

Clear prompts reduce ambiguity, but prompts are not governance mechanisms: a model can misread, deprioritize, or ignore an instruction. Business logic should live in structured rules, schemas, and policies that code can enforce, not buried inside prompt text.
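To illustrate keeping business logic out of prompt text, the sketch below stores a hypothetical refund schema and policy as data and enforces them in code on the agent's JSON output. The field names, the 500 limit, and the allowed reasons are assumptions for illustration; a production system might use a schema library or policy engine instead of hand-written checks.

```python
import json

# Hypothetical refund rules kept as data that code enforces,
# instead of as sentences buried in a prompt.
REFUND_SCHEMA = {"order_id": str, "amount": (int, float), "reason": str}
REFUND_POLICY = {"max_amount": 500, "allowed_reasons": {"damaged", "late", "wrong_item"}}


def parse_refund_request(agent_output: str) -> dict:
    """Parse the agent's JSON output and enforce schema and policy.

    Raises ValueError on malformed JSON or any rule violation, so a
    non-compliant request never reaches the next step.
    """
    data = json.loads(agent_output)  # json.JSONDecodeError is a ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field, expected_type in REFUND_SCHEMA.items():
        value = data.get(field)
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            raise ValueError(f"field {field!r} is missing or has the wrong type")
    if data["amount"] > REFUND_POLICY["max_amount"]:
        raise ValueError("amount exceeds the refund policy limit")
    if data["reason"] not in REFUND_POLICY["allowed_reasons"]:
        raise ValueError(f"reason {data['reason']!r} is not an allowed refund reason")
    return data


# Usage: a compliant request passes; an over-limit one would raise ValueError.
print(parse_refund_request('{"order_id": "A-1", "amount": 40, "reason": "late"}'))
```

Changing the refund limit now means editing one value that is enforced on every request, rather than rewording a prompt and hoping the model follows it.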

5. Guide Behavior with Examples

Few-shot examples (a handful of worked input/output pairs included in the prompt) help anchor agent responses, especially for edge cases. Examples act as guardrails, not replacements for structure.
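A small sketch of few-shot prompt assembly. The examples, the `Input:`/`Output:` format, and the `MISSING` convention are hypothetical; note that one example demonstrates the desired behavior on an ambiguous request (route to clarification) rather than covering only the happy path.

```python
# Hypothetical worked examples. The last one covers an edge case:
# an ambiguous request should produce a clarification, not a guess.
EXAMPLES = [
    ("Cancel order A-100", "intent=cancel_order; order_id=A-100"),
    ("Where is my package?", "intent=track_order; order_id=MISSING"),
    ("Do the thing from before", "intent=clarify; order_id=MISSING"),
]


def build_few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble the instruction, worked examples, and new input into one prompt."""
    parts = [instruction, ""]
    for user_text, expected in examples:
        parts += [f"Input: {user_text}", f"Output: {expected}", ""]
    parts += [f"Input: {query}", "Output:"]
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Classify the request. Use MISSING for unknown fields; never guess an ID.",
    EXAMPLES,
    "Refund order B-7",
)
print(prompt)
```

The examples shape the format and edge-case behavior of the response, but the output should still pass through schema and policy checks before it is acted on.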

6. Monitor, Measure, and Iterate

Treat hallucination reduction as an ongoing discipline:

- Track failure patterns

- Log uncertainty and overrides

- Use feedback to refine workflows and controls
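A minimal sketch of the logging side, assuming each agent step emits one structured event. The `StepEvent` fields, the 0.6 low-confidence cutoff, and the sample events are hypothetical; the summary shows which steps get overridden most and which failure labels recur, which is where refinement effort is likely to pay off.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class StepEvent:
    """One structured log record per agent step (hypothetical minimal fields)."""
    step: str
    confidence: float               # score from the agent or a scoring model
    overridden: bool                # True if a human or validator replaced the output
    failure: Optional[str] = None   # short failure label, e.g. "wrong_vendor"


def summarize(events: list[StepEvent], low_confidence: float = 0.6) -> dict:
    """Aggregate events into signals for refining workflows and controls."""
    per_step = Counter(e.step for e in events)
    overrides = Counter(e.step for e in events if e.overridden)
    return {
        "override_rate": {step: overrides[step] / n for step, n in per_step.items()},
        "low_confidence_count": sum(e.confidence < low_confidence for e in events),
        "top_failures": Counter(e.failure for e in events if e.failure).most_common(3),
    }


# Usage with hypothetical sample events.
events = [
    StepEvent("extract", 0.92, False),
    StepEvent("match_po", 0.41, True, "wrong_vendor"),
    StepEvent("match_po", 0.83, False),
    StepEvent("match_po", 0.55, True, "wrong_vendor"),
]
print(summarize(events))
```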

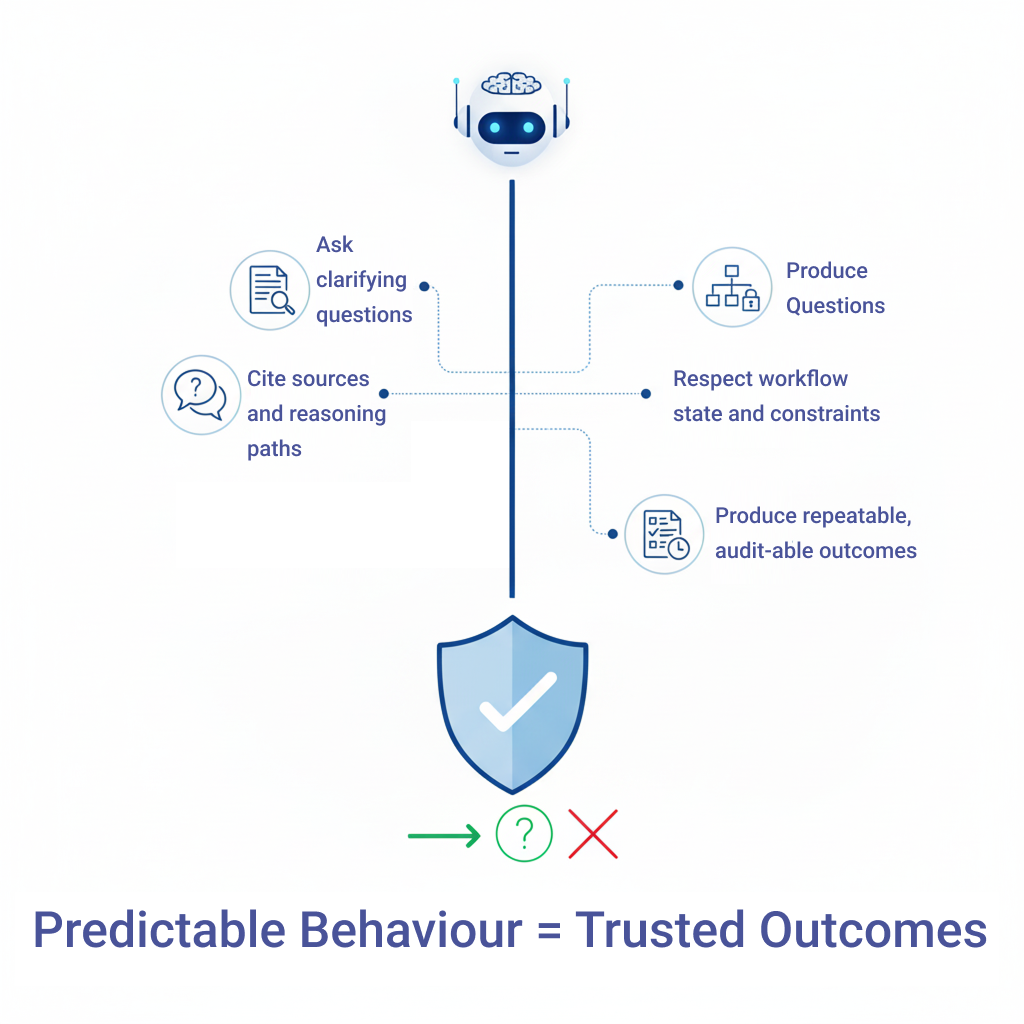

The Role of Humans in Hallucination-Free Systems

Human-in-the-loop isn't a fallback—it's a feature. Humans should intervene at:

- Decision boundaries

- Low-confidence scenarios

- Policy exceptions

This creates a safety net while keeping routine, low-risk decisions fast and fully automated.
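The three escalation points listed in this section can be expressed as an explicit routing rule. This is a sketch under assumed thresholds (`CONFIDENCE_FLOOR`, `AUTO_APPROVE_LIMIT`) and an assumed `Decision` shape; in practice the confidence value would come from the agent or a separate scoring model, and the limits from the people who own the risk.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str
    confidence: float               # 0.0 to 1.0, from the agent or a scoring model
    amount: float = 0.0             # business impact, used as a decision boundary
    policy_exception: bool = False  # True if the action falls outside standard policy


# Hypothetical thresholds; real values would be set by risk owners.
CONFIDENCE_FLOOR = 0.75
AUTO_APPROVE_LIMIT = 1_000.0


def route(decision: Decision) -> str:
    """Return 'human' when any escalation condition holds, else 'auto'."""
    if decision.policy_exception:
        return "human"  # policy exceptions always get a reviewer
    if decision.amount > AUTO_APPROVE_LIMIT:
        return "human"  # decision boundary: impact too high to automate
    if decision.confidence < CONFIDENCE_FLOOR:
        return "human"  # low-confidence scenario
    return "auto"


print(route(Decision("refund", confidence=0.95, amount=200.0)))  # auto
print(route(Decision("refund", confidence=0.50, amount=200.0)))  # human
```

Keeping the rule in code makes the escalation policy auditable and easy to tune without touching prompts.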

Emerging Tooling Is Closing the Gap

Modern platforms are beginning to support:

- Confidence scoring

- Semantic validation

- Fact-checking integrations

- Inconsistency detection

These capabilities make it easier to design agent systems that recognize uncertainty instead of masking it.
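Platform features vary, but one simple, well-known way to approximate confidence scoring and inconsistency detection is self-consistency: ask the same question several times and measure how often the answers agree. The sketch below assumes the samples have already been collected (hardcoded here for illustration) and uses a hypothetical 0.7 agreement threshold; it does not describe any particular platform's feature.

```python
from collections import Counter
from typing import Optional


def normalize(answer: str) -> str:
    # Cheap normalization so trivially different phrasings still match.
    return " ".join(answer.lower().split()).rstrip(".")


def consistency_check(samples: list[str], min_agreement: float = 0.7) -> tuple[Optional[str], float]:
    """Majority vote over independent samples of the same question.

    Returns (answer, agreement). The answer is None when agreement falls
    below min_agreement, surfacing uncertainty instead of masking it.
    """
    if not samples:
        return None, 0.0
    counts = Counter(normalize(s) for s in samples)
    answer, votes = counts.most_common(1)[0]
    agreement = votes / len(samples)
    return (answer if agreement >= min_agreement else None), agreement


# Hypothetical samples from repeated calls asking for a contract's payment terms.
print(consistency_check(["Net 30", "net 30.", "Net 30", "Net 45"]))  # ('net 30', 0.75)
print(consistency_check(["Net 30", "Net 45", "Net 60"])[0])          # None
```

A `None` result is a natural trigger for the human-review routing described earlier, rather than a value to pass downstream.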

Agent hallucinations aren't random glitches; they are symptoms of missing structure, grounding, and control.

To stop them:

- Modularize workflows

- Ground agents in real knowledge

- Validate decisions explicitly

- Keep humans involved where judgment matters

The future of agentic AI isn't smarter improvisation—it's disciplined, grounded intelligence that works reliably inside complex systems.

Cybic’s Approach to Reliable Agent Workflows

At Cybic, we see hallucinations as an architecture challenge, not just a model issue. Reliable agents need the right foundation across data, workflows, and governance.

Our methodology focuses on:

- Ontology-driven intelligence so agents understand business context, not just text.

- Structured workflow design to limit ambiguity and guide decisions.

- Validation and human oversight to catch errors before they impact operations.

- Integration with trusted enterprise data to keep outputs grounded in reality.